Beyond the AI Agent: When Traditional Solutions Win

Image 1: Tennis Partner: AI Agents don’t improve workflows that are direct or well-known. (Assistance from GenAI✧)

Few would support AI agents as tennis partners, but is an AI agent solution for your problem over-engineering and suppressing value? In this article, we analyze a well-funded but seldom debated topic in this space: AI agents and workflows that are agent-centric (an agentic solution). Using lessons learned through Fyve Labs’ software and development services, we observe that agentic flows are amazing tools for content creation and flow execution, but the majority of business and personal use cases today are better served by more direct, well-defined structures.

Two tests: Two ten-second tests to help determine use case fitness for both general automation and AI agents.

Claims: We’ll briefly introduce the relevance for an agentic discussion today.

Comparison: A comparison matrix for agentic and agent-less solutions reviews efficiency, cost, and accuracy with industry guidance.

TL;DR: Jump right to the insights if you need to get the gist in under a minute.

What steps in your problem are ripe for automation? For each step that you uncover, is an agentic solution the right solution? Take the two simple tests below for a high-level assessment.


There is a lot of excitement around agents, and we read it all to provide the best solution for each problem. Here’s a succinct, no-frills definition of an AI agent: (1) a prompt defining a persona and actions for a domain and task, that (2) attempts to use tools or functions to execute steps in the task, and (3) returns a final conversation (or program) after reaching a pre-determined stopping criterion (here’s a good overview). An agentic solution breaks down a textual problem and uses several agents to adaptively answer problem needs. The most popular libraries for implementation today are OpenAI, AG2 (previously AutoGen), LangGraph, CrewAI, and LlamaIndex. Feature differentiation is decreasing, so picking the right library depends on your familiarity with the internal libraries used, a friend with experience, and finding a sample that most aligns with your problem.
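The three-part definition above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the API of any library named here: `stub_llm`, `TOOLS`, `lookup_account`, and the `tool:`/`final:` reply format are hypothetical stand-ins for a real model call and tool registry.

```python
from typing import Callable

# Hypothetical tool registry: plain functions the agent is allowed to call.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_account": lambda account_id: f"account {account_id}: status=active",
}

# (1) A prompt defining a persona and actions for a domain and task.
PERSONA = "You are a support analyst. Use tools to answer account questions."


def stub_llm(persona: str, history: list[str]) -> str:
    """Stand-in for a real LLM call (assumption for this sketch).

    Replies with 'tool:<name>:<arg>' to request a tool, or 'final:<text>' to stop.
    """
    if not any(line.startswith("tool_result:") for line in history):
        return "tool:lookup_account:42"
    return "final:" + history[-1].removeprefix("tool_result:")


def run_agent(task: str, max_steps: int = 5) -> str:
    history = [f"task:{task}"]
    for _ in range(max_steps):  # (3) pre-determined stopping criterion
        reply = stub_llm(PERSONA, history)
        if reply.startswith("final:"):
            return reply.removeprefix("final:")
        _, name, arg = reply.split(":", 2)
        result = TOOLS[name](arg)  # (2) use a tool to execute a step
        history.append(f"tool_result:{result}")
    return "stopped: step limit reached"


print(run_agent("Is account 42 active?"))  # account 42: status=active
```

Note that even this toy loop calls the model twice for a single lookup, which is the overhead the rest of this article weighs against direct solutions.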

This is Only a Test

So you want to add AI and agents to your workflow? Great! Apply these two tests to either affirm agent value or spot unnecessary intermediaries that complicate things without providing real benefits.

The One-second Test

Although first recorded in 2016, Andrew Ng’s reflection below is used as the foundation for this automation test: can a typical worker do this task in one second?

Almost anything a typical human can do with less than one second of mental thought, we can probably now or in the near future automate using AI. The key here is "typical human". Think of it like asking a random stranger on the street. If they could perform the cognitive task at hand in less than 1 second, it's very likely AI can do it as well.

Andrew Ng, HBR 2016

While machine capabilities and output quality will improve over time, this test remains valid. Complex tasks are still challenging for ML and AI automation. The solution? Break those big tasks into smaller, AI-manageable steps.

The Workflow Test

Once you've broken down your solution into one-second tasks viable for automation, evaluate each for complexity: does it follow a well-known pattern or clear action?

If you can immediately describe the steps in your task with “account lookup”, “clustering”, or “statistical averaging”, your solution will gain little value by adding agents or agentic solutions to the mix.

If your steps are “it depends” or “it’s complicated” the intrinsic discovery and planning operations that AI agents add may be a better fit.

Image 2: A two-test decision tree for how to prepare a solution for automation and whether it should use AI agents or traditional methods.

Examples of one-second tasks are summarizing recent account activity, classifying a support ticket’s priority, or even translating a text query into a SQL statement. You’ll get the best solution if you can pre-define some of those steps and the persona or job role required to complete them (see tools, functions, and personas). You can also allow an AI agent to reason out your intent, but lower upfront clarity may bring additional resource costs or lower accuracy.
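The two tests can be written down as a small decision function. This is only a sketch of the reasoning in this section: the `Step` fields and the returned labels are hypothetical names chosen for illustration, and the yes/no answers still come from your own judgment of each step.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    one_second_task: bool     # could a typical person do this in about one second?
    well_known_pattern: bool  # e.g. "account lookup", "clustering", "statistical averaging"


def recommend(step: Step) -> str:
    # One-second test: bigger tasks should be split before automating.
    if not step.one_second_task:
        return "break down further"
    # Workflow test: clear, well-known steps gain little from agents.
    if step.well_known_pattern:
        return "traditional"
    # "It depends" steps may benefit from agent discovery and planning.
    return "agentic"


steps = [
    Step("classify ticket priority", one_second_task=True, well_known_pattern=True),
    Step("decide next outreach action", one_second_task=True, well_known_pattern=False),
    Step("run quarterly market study", one_second_task=False, well_known_pattern=False),
]
for step in steps:
    print(f"{step.name}: {recommend(step)}")
```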

Armed with these two simple tests, let’s look at capability claims for agentic approaches across various domains.

Agentic Claims

While writing this article in early 2025, there is an overabundance of blogs, Medium articles, and LinkedIn feeds with bold claims that AI agents are the future for staff-less offices, instantaneous research, and even get-rich-quick schemes(!). Agentic AI workflows are a control paradigm (reminiscent of MAPE loops by IBM in 2001) to solve textually defined use cases. They are a software pattern in its infancy, but nearing the middle of a hype cycle, combining no-code/low-code goals and deep semantic knowledge provided by LLMs. Just as tennis players don't need instructions for each volley, not every process needs an agentic analysis. At Fyve Labs, we build these systems for others and continuously scan progress for Innovation Signals in the AI space. Below are three distilled claims often seen in agentic articles.

Faster & Broader Knowledge Domains

AI agents often work faster and with broader reach than traditional knowledge workers. Specifically, a distributed set of many agent processes can run in parallel to search, retrieve, and summarize information. Returning to Andrew Ng’s HBR article, we note that software and speed may not be limiting factors. Talent, *not open source software*, will be required to correctly fit AI systems into a business’ complex adaptive system. Data, *not software*, will drive personalization systems that your customers seek and it will be increasingly protected. This means that AI agents will reduce efforts for data acquisition across domains, but a thoughtful selection of sources and application of the aggregated findings likely requires human interpretation.

Replication of Human Cohorts

Several articles propose an exact replacement of multiple job roles with AI agents to mimic a department hierarchy or conversations between experts. For marketing or sales teams, a rote replication of roles (research, copywriter, CRM injection) lacks customization for each business. Sellers may reject generated leads as unorganized and disconnected from the business or the normal sales channels. Within IT teams, replication of an entire scrum team (project manager, product manager, architect, developer, tester) is also tenuous, but results are better aligned to human outputs and rejected less often by subsequent users. In roles where human counterparts added little creative- or thought-based contribution (like writing user stories from a document or collecting progress and summarizing), some full-automation solutions (technical issue reporting, discussion, fixing, testing) can successfully multiply the efforts of small teams.

A question to ask of any role replication is: does each new agent role add real value to the overall solution? Why is this important? Borrowing a running analogy, you won’t finish a race any faster with ten marathon runners if they are competing at the same time.

All Automation Tasks Should be Agentic

When a solution is successful it’s hard not to double down expecting similar returns. However, focusing too much on one tool alone leads to inappropriate overuse. To avoid this overuse, let’s review how others are using the same collection of capabilities in automation, agents, and chatbots.

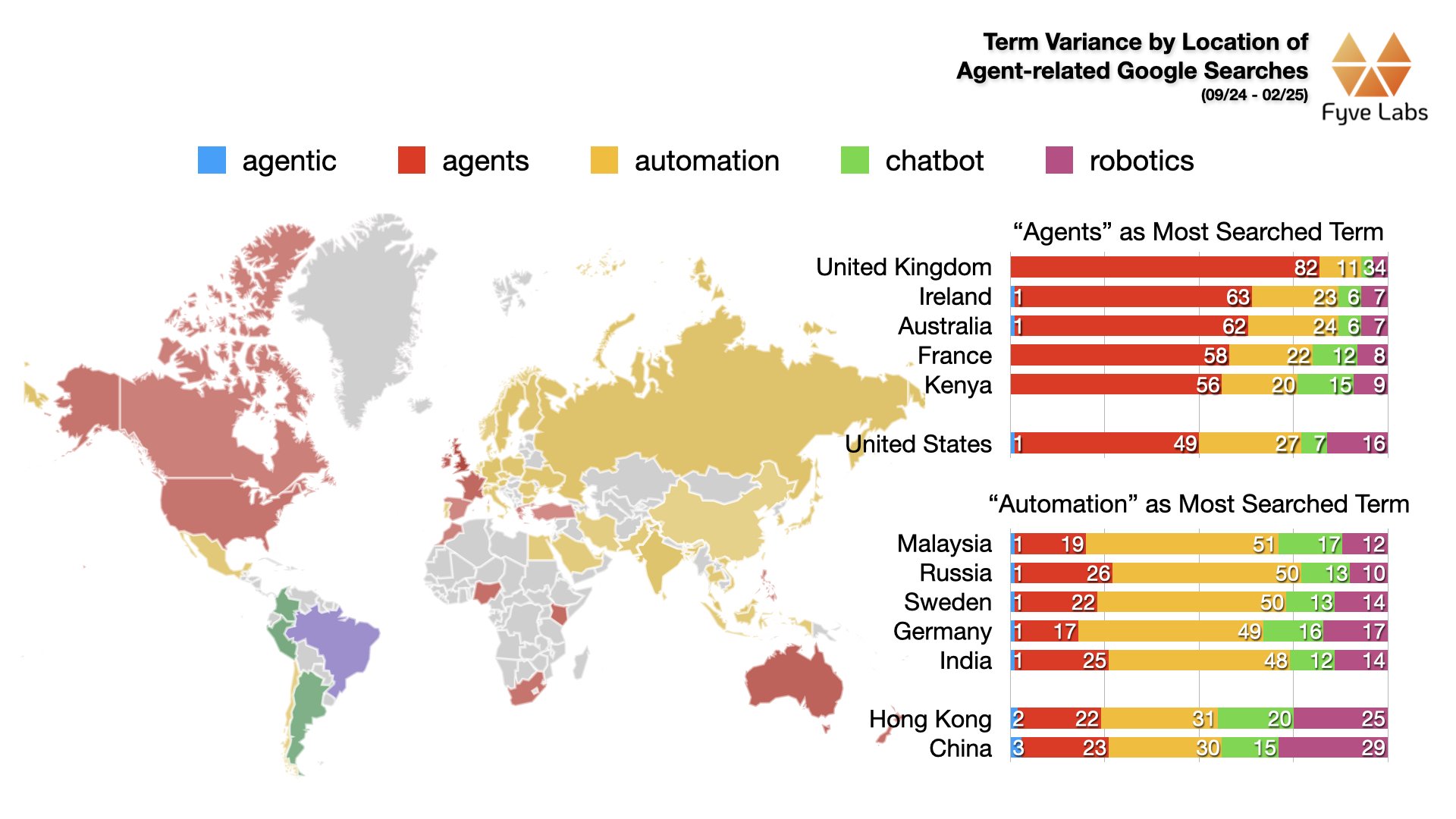

Using a trend analysis of search terms from our Innovation Signals article, we see that regional use of agents, chatbots, and automation is quite different. Looking at two groups (group A favoring “agents” and group B favoring “automation”), alignment to tasks by industry is apparent. Group A (United Kingdom, Ireland, Australia, France, Kenya, United States) leans towards service-based industries such as finance, technology, and creative sectors and favors agents. Group B (Malaysia, Russia, Sweden, Germany, India, Hong Kong, China) has strengths in manufacturing, industrial production, and energy, reflecting a broader base of industrial and economic activities and prefers classic automation. While based only on search trends, we can infer the most likely level of automation for users (both consumer and business) across different regions.

Image 3: Percentage of Agent-related Google Search terms as it varies by location. (Google data)

A related claim is high reusability of tools developed in agentic frameworks. Specifically, the modularization of a solution will allow agents to easily reuse the steps (aka tools, functions, or modules) for future use cases at the exact right time. Consider popular solution steps like looking up support tickets, performing search with a specific search engine, or rebuilding a RAG pipeline. Unfortunately, unless a single solution runs across your business (one database, one API, etc.) each of these steps will require further customization and contextual inputs to work correctly. Looking at support tickets alone, variations exist for (1) internal, external, or proxied customers, (2) how to protect and where to source secure PII data, and (3) what are downstream application needs (rich reasoning, a price-point, customer satisfaction?). All of these variants are hard to capture in a prompt that an agent will consistently understand and answer correctly.

The Comparison

Let's examine specific topics comparing agentic and traditional ML/AI solutions — remember, simpler solutions often prevail.

Cost & Resources

Agents currently rely on textual tokens for inter-communication. This means that every exchange (codifying inputs, sending to an LLM, and recognizing outputs) will have a token and compute cost. Specific to agents, a large cost is the use of reasoning methods like a Chain-of-Thought (CoT) to propose, revise, and restate conclusions incorporated in persona and job role prompts.

Traditional methods also have token costs, but if the input and output are well known, costs to determine tool routing are not incurred. While both methods use prompts, traditional ones have a resource burden for prompt tuning (or model tuning) during training. Traditional methods have costs per prompt, not per execution as with agents. (winner)
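A rough back-of-the-envelope comparison makes the per-execution versus per-prompt difference concrete. Every number below is an assumption picked for illustration (run count, steps per run, tokens per step, tuning budget), not a measurement, so substitute your own figures.

```python
def agentic_tokens(executions: int, steps_per_run: int, tokens_per_step: int) -> int:
    """Every step of every run pays for input, reasoning, and output tokens."""
    return executions * steps_per_run * tokens_per_step


def traditional_tokens(executions: int, tuning_tokens: int, tokens_per_call: int) -> int:
    """One-time prompt tuning cost plus a single fixed-shape call per run."""
    return tuning_tokens + executions * tokens_per_call


runs = 10_000  # assumed monthly executions
agentic = agentic_tokens(runs, steps_per_run=6, tokens_per_step=1_500)
traditional = traditional_tokens(runs, tuning_tokens=2_000_000, tokens_per_call=800)

print(agentic)      # 90000000
print(traditional)  # 10000000
```

Under these assumed numbers the upfront tuning cost is paid once, while the agentic total keeps scaling with both the number of runs and the number of steps in each run.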

Structured Data

Agents use a textual medium, serializing images, audio, and any structured metadata into and out of each communication through additional compute (structured output handling, CoT, instructor). Modern vision and audio models require expensive base64 encoding and inclusion in each context window. Finally, the lossy translation between tokens (AI weights) and text (human language) may degrade signals, especially with quantized models at the heart of AI agent optimization.

Traditional methods can directly predict on binary payloads or pass schema-rich encodings like JSON, YAML, etc. Where required, binary schema protocols like Protobuf are now industry-standard and optimized for high-speed, high-volume API message passing. Compared with agents, binary payloads in traditional methods may not need additional operations for embedding for RAG search or storage. (winner)
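The size penalty of pushing binary data through a text channel is easy to check with the standard library. In this sketch the payload is synthetic bytes standing in for an image or audio clip, and the `"image"` JSON key is a made-up field name.

```python
import base64
import json

# 3,072 synthetic bytes standing in for a small image or audio clip.
payload = bytes(range(256)) * 12

# Base64 maps every 3 bytes to 4 ASCII characters so the data can ride in text.
encoded = base64.b64encode(payload)
message = json.dumps({"image": encoded.decode("ascii")})

print(len(payload))  # 3072
print(len(encoded))  # 4096
print(len(encoded) / len(payload))

# The round trip is lossless, but the text form is about one third larger
# before any tokenization cost is counted.
assert base64.b64decode(encoded) == payload
```

A binary protocol would send the original 3,072 bytes as-is, while the text route carries the inflated form in every context window that includes it.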

Lack of Repeatability and Procedural Tuning

AI agents are challenging to debug and tune. Like all LLMs, agents are driven by a prompt and a numerical seed, so there is little transparency for the internal reasoning model. Layered telemetry capture tools like OpenTelemetry (OTEL) now have LLM-specific hooks in Langfuse and MLflow to help. But if an agentic persona or job role was defined with low-code tools or only a few sentences, repeating a full agentic response and tuning the workflow may be trial and error.

Traditional methods in ML/AI are built on feedback and supervised signals. Revisiting our workflow test, these methods excel with well-defined inputs and outputs. While a single step in an agentic workflow could be optimized like a traditional method, doing so defeats the purpose of using an agentic solution. Recent libraries like DSPy offer statistical tuning for individual LLM prompts, but multi-agent workflows remain too stochastic to benefit from these optimization techniques. (winner)
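One simple way to put a number on repeatability is to rerun the same prompt under different seeds and report how often the most common answer appears. In the sketch below, `stub_agent` is a hypothetical stand-in that picks an answer from the prompt and seed; it is not a call into any real agent framework, and the three answer labels are invented for the example.

```python
import random
from collections import Counter


def stub_agent(prompt: str, seed: int) -> str:
    """Stand-in for an agent run: the answer depends on both prompt and seed."""
    rng = random.Random(f"{prompt}|{seed}")
    return rng.choice(["refund", "escalate", "close"])


def agreement_rate(prompt: str, seeds: range) -> float:
    """Share of runs that return the most common answer (1.0 means fully repeatable)."""
    answers = Counter(stub_agent(prompt, seed) for seed in seeds)
    return answers.most_common(1)[0][1] / len(seeds)


# Same prompt and same seed reproduce the same answer.
assert stub_agent("handle ticket 17", seed=3) == stub_agent("handle ticket 17", seed=3)

# Varying the seed exposes how much the answer moves around.
print(agreement_rate("handle ticket 17", range(20)))
```

Tracking a score like this per step, before and after a prompt change, gives at least a coarse signal in place of pure trial and error.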

Task Routing and Transparency

Fine-tuned “function calling” LLMs enable agents to select tools across a wide set of domains from natural language instructions. However, this doesn't mean agents are sentient or that “an AI agent can think autonomously and solve complex tasks using memory tools and reasoning”. Self-organizing interaction schemes (hierarchical, joint-chat, multi-agent) are improving quickly, but each behavioral adaptation must rediscover its context on every agent execution. (tie)

Traditional methods use well-defined combination schemes for expert voting and model mixing, which is likely how GPT-4 was first built. Traditional methods are often associated with data transparency and achieving single-digit error rates but never sentience. Unlike agent-based LLMs, which struggle with data bias and weaker knowledge retention during fine-tuning, traditional methods often provide more reliable results. (tie)
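Expert voting is one of the simplest combination schemes, and it is transparent because every vote can be inspected. The sketch below uses three toy rule-based classifiers for ticket priority; their names and rules are hypothetical and stand in for independently trained models.

```python
from collections import Counter
from typing import Callable, Sequence

Classifier = Callable[[str], str]


# Three toy "experts" (hypothetical rules standing in for trained models).
def by_keyword(text: str) -> str:
    return "high" if "outage" in text.lower() else "low"


def by_length(text: str) -> str:
    return "high" if len(text) > 80 else "low"


def by_punctuation(text: str) -> str:
    return "high" if "!" in text else "low"


def majority_vote(models: Sequence[Classifier], text: str) -> tuple[str, dict[str, int]]:
    """Return the winning label plus the full tally for auditing.

    Ties go to the label that was voted first.
    """
    votes = Counter(model(text) for model in models)
    label, _ = votes.most_common(1)[0]
    return label, dict(votes)


label, tally = majority_vote([by_keyword, by_length, by_punctuation], "Outage in region east!")
print(label, tally)  # high {'high': 2, 'low': 1}
```

The routing here is fixed in code, so there is no per-request cost to decide which expert to call, and the tally documents exactly why a label was chosen.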

Summarizing the above discussions into a table, we highlight the strongest points for each topic for both agentic and traditional solutions.

| Topic | Agentic | Traditional |
|---|---|---|
| Cost and Resources | Token overhead for input, output, and reasoning compute in each agent execution | With prompts, token overhead for initial prompt tuning by developers |
| Structured Communication | Text is the medium, as a slower and lossier information encoding than binary data | Using APIs, high-velocity and high-volume capabilities with native format for embedding |
| Repeatability and Tuning | Challenging to debug; detail of agent persona and tasks may be sparse | Founded on supervised signals; easy to train, version, and monitor |
| Tasks and Transparency | Dynamic tool and function selection from task description | Strong data transparency for bias and knowledge testing |

TL;DR: Where do AI Agents shine?

Given the infancy of agent-based interactions, we recommend jumping into traditional methods before learning and standing up a full agentic solution. If you jumped here from the intro, check out the reasoning above for more justification.

Agents: Creative tasks that can run for a longer duration, at higher cost, and that do not require high-accuracy results. OpenAI is now setting another precedent for wait times and result depth, and Google proposed an AI co-scientist that fits a similar usage pattern.

Generally, these are harder cognitive tasks that are executed less frequently, like marketing research or prototyping a novel application or interaction.

For high-quality agentic results, reduce the coordination burden of agents and avoid the use of complex conversations and debates.

Traditional: Traditional methods are the clear winner for cost, efficiency, and tuning of the overall pipeline for performance.

Generally, these tasks are complicated automations, like preparing a billing summary, performing issue, ticket, and care triage, or providing generative content for fixed-path applications.

For performant use of traditional methods in AI scenarios, map out the modular steps of your task with clarity for modular testing and replacement.

In summary, keep these guidelines in mind: automate simple tasks individually, avoid unnecessary agent debates, don’t force agentic solutions onto structured data problems, and remember that all agentic communication adds token overhead to each execution.

Bringing Agents to Your Customer

Agentic platforms are a new computing paradigm (trend or not) that must be thoughtfully analyzed by your business. In our next Fyve Insights article, we explore the space of serverless compute for all of those great GPU-hungry models you want to run with a Fyve Labs product as a demo.

Part 1: Innovation Signals of Tomorrow’s Tech (CES 2025). We analyze Innovation Signals from CES 2025's emerging technologies (companion robots, AI wearables, and smart automation) to reveal market opportunities.

Part 2: Beyond the AI Agent: When Traditional Solutions Win. Congrats, you just read it!

Part 3: Serverless & Multimodal Generation: Accommodating New Infrastructure. We test some principles in action through real-world serverless architecture and examine a hybrid cloud audio processing implementation.

Part 4: Startup Mortality: Learning from 3 Years of Tech Failures. We'll analyze three years of startup data to surface any trends in technology strategies.

Part 5: SXSW 2025: Innovation Landscape Update. Join us at SXSW as we explore emerging technology trends where we come back to another venue focused on innovation.

Still want more? If you’re ready to leverage user research and statement-of-work based projects for AI solution development, reach out to Fyve Labs directly. We can help get you started with a suite of integrated chat and governance tools, provide innovation and strategy advice for a project in flight, or build everything with you: the research, technology, and advice for strategic protection of future IP.